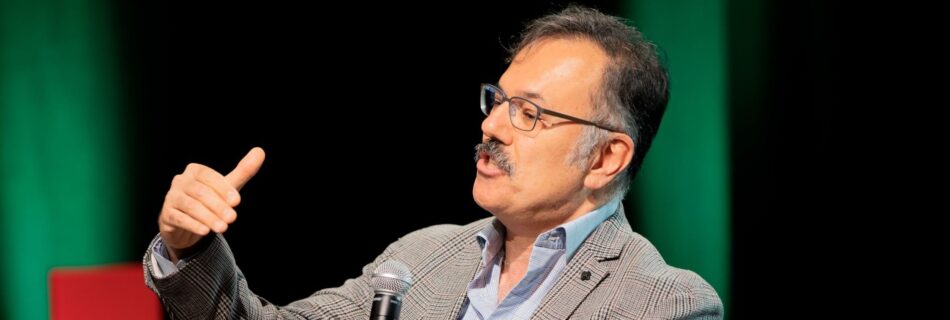

Prof. Babak Falsafi presented a keynote speech, “What’s Hot?”, at the inaugural HotInfra conference on June 18th, 2023, in Orlando, Florida. Datacenters are the pillars of a digital economy and modern-day global IT services. The building blocks for today’s datacenters are cost-effective volume servers that find their roots […]

News

Monitoring and Management of Data Centers in the Internet-of-Things Era

The Scientific Director of EcoCloud gave a talk at the Intelligent Maintenance Conference, held at EPFL in September. Prof. David Atienza examined how inefficient monitoring and management of data centers can affect both energy costs, and with them the sustainability of our planet, and the reliability of […]

EPFL publishes EcoCloud video

The Associate Vice Presidency for Centers and Platforms has released a series of videos about EPFL centers, including this one, an interview with Prof. David Atienza, Director of EcoCloud.

Visiting Speaker on Innovation for Energy-Efficient Deep Learning

Dr. Gourav Datta, who has just finished his PhD at the Viterbi School of Engineering, University of Southern California, gave a talk entitled “Innovation for Energy-Efficient Deep Learning”.

Clearing the clouds, a winning paper a decade on

In 2012, researchers from the Parallel Systems Architecture Laboratory (PARSA) and the Data Intensive Applications & Systems Lab (DIAS) published a hugely ambitious paper entitled “Clearing the clouds: a study of emerging scale-out workloads on modern hardware”. A decade on, it has received the Influential Paper Award at this year’s International […]

Innovation for Energy-Efficient Deep Learning

Dr. Gourav Datta of the University of Southern California Viterbi, Electrical Engineering, will give a presentation on 1 June at noon in lecture theatre BC420, followed by a pizza lunch. Title: “In-Sensor & Neuromorphic Computing Are All You Need for Energy-Efficient Deep Learning”. This talk will focus on two research […]

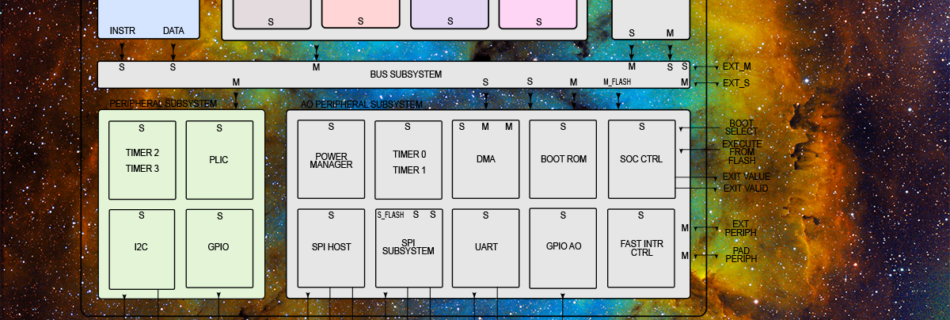

x-heep: An innovative platform for healthcare device development

The advancement of continuous healthcare monitoring depends on the development of new, more efficient ultra-low-power wearable platforms as well as new algorithms. However, the teams that develop the algorithms are usually not the same as those that design the platforms; as a result, optimization opportunities are often lost in the […]

EPFL Open Days

29-30 April 2023, Rolex Learning Center. IT for Sustainability and Sustainable IT: are both possible? Can IT be a true enabler for a sustainable society, particularly in cities and for urban infrastructure? What are digital twins, and are they the answer? How can we use minimal energy for increasing data […]

New EcoCloud brochure is published

Our new brochure, featuring articles on our networking with industry, research projects and academic collaborations, has now been published. You can preview and download it here: Industry Liaison Brochure

Harnessing light

Demetri Psaltis and the return of optical neural networks. It is not often that professors from EcoCloud are featured in the pages of The Economist. In an article from December 2022, entitled “Artificial intelligence and the rise of optical computing”, the author had this to say: “The idea of turning […]
