Demands on cloud services continue to increase, but the end of Moore’s law means that transistors are no longer getting significantly smaller every year. Heterogeneity is the answer: servers need flexible platforms. Field-programmable gate arrays, or FPGAs, are semiconductor devices so adaptable that they can be reconfigured at runtime. Most […]

News

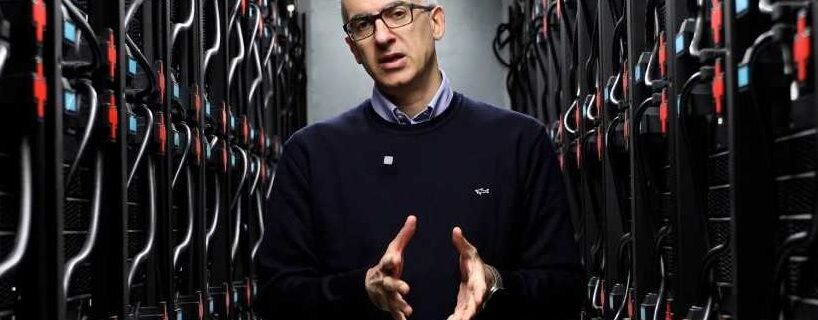

A true measure of datacenter consumption

Prof. Babak Falsafi reviews the state of the art in datacenter energy efficiency and presents his vision of the future. Original article published here in German: Wie effizient sind Rechenzentren wirklich? and in French: Efficacité réelle des centres de données. The datacenter market is constantly evolving – and so is […]

SwissChips takes off!

A new Swiss initiative is coming: SwissChips will look at growing the domestic production of chips in the face of increasingly inbound production amongst the world’s biggest manufacturers. The fact that Switzerland is currently excluded from many European research and industry programs only makes the drive to develop this local […]

Oracle Labs Presentation

On 25 January 2024, Dr. Sungpack Hong gave a presentation detailing Oracle’s approach to the benefits of AI. This talk focused on Oracle Labs’ role in the rapidly changing world of AI and data analytics. We showcased Oracle’s latest research and developments in these areas, emphasizing how AI is […]

Predicting the future with CloudProphet

If we are going to reduce the carbon footprint of data centers, we need to use computing resources more efficiently. If processes always made use of data center facilities in a regular way, it would be an easy game. However, the resources of a data center are used by customers […]

X-HEEP community is growing fast: X-Agora at EPFL

The Internet of Things is expanding at an incredible rate, and consequently demands for ever smaller intelligent hardware are constantly increasing. The Embedded Systems Lab has collaborated with other EPFL teams and EcoCloud to design X-HEEP: a RISC-V chip which is aimed at tiny platforms with big jobs to do. […]

Divide and conquer: breaking memory up into compartments to boost security

For computer security, enforcing the principle “do not let your left hand know what your right hand is doing” is vital. Modern computers and smartphones do many things at the same time. For example, you may have a browser with one tab playing a song while in another you are […]

Feature on EcoCloud member Zhengmao Lu

Faculty member Prof. Zhengmao Lu is interviewed by Michael Mitchell of EPFL. A new addition to EPFL’s Institute of Mechanical Engineering, Zhengmao Lu brings fresh ideas for a sustainable future to the domain of energy transfer. Zhengmao Lu, a newly appointed Tenure Track Assistant Professor at EPFL’s School of Engineering, […]

Getting control over our data centers: Heating Bits

The cloud is ever-growing and, as a consequence, data centers are multiplying in size and number. The situation is difficult to manage. To meet demand, outdated and inefficient technology is often being used to provide quick solutions. Cooling systems are frequently unwieldy and energy inefficient, with little or no use […]

A Large Language Model for Medical Knowledge

EPFL researchers have just released Meditron, the world’s best-performing open-source Large Language Model tailored to the medical field and designed to help guide clinical decision-making. Large language models (LLMs) are deep learning algorithms trained on vast amounts of text to learn billions of mathematical relationships between words (also called […]
