People are making increasingly large demands of “The Cloud”, from ever larger data backups to multiplying numbers of virtual machines. In turn, this means that data centers need to use more energy to power their infrastructure, and even more energy to keep their machines cool. In order to lower their […]

News

EPFL Swiss Federal Offices Day 2024

On 7 October 2024, this one-day conference will offer a unique opportunity to explore Federal funding programmes and meet their representatives. Federal Offices represent a major source of research funding in Switzerland, in particular at EPFL. They also drive research in specific directions, through topical calls. On October 7, representatives […]

Eaton are recruiting a Data Center Key Account Manager

Eaton Industries Manufacturing GmbH are recruiting. Our EMEA headquarters in Morges, as well as our teams in Effretikon, Le Lieu, Le Mont-sur-Lausanne, and St. Gallen, develop technically high-quality solutions for industry, energy supply, vehicle construction, trade, housing construction, and information technology. We are involved in many groundbreaking projects in Switzerland and […]

The impact of science continues to grow in our societies

EPFL Professor Anne-Marie Kermarrec has been appointed by French President Emmanuel Macron to the newly established Presidential Science Council. In December 2023 Emmanuel Macron announced the creation of a ‘Presidential Science Council’ to be made up of 14 high-level scientists representing all disciplines. The Elysée Palace announced that the new […]

SwissChips – coming to the defense of Swiss chip design

The State Secretariat for Education, Research and Innovation (SERI) is to invest CHF 26 million in the development of chip design, with partners ETHZ, EPFL and CSEM contributing a further CHF 7.8 million. The SwissChips initiative is setting out to defend Switzerland’s position as a world leader in this vital […]

EcoCloud and its projects are presented at Data Champion Talks

Miguel Peón presented EcoCloud and many of its projects at the EPFL Data Champion Talks on 18 April. The title of the day was “Green Bytes: Data-Driven Approaches to EPFL Sustainability”. Projects featured in Miguel’s talk: UrbanTwin, Heating Bits, Midgard, and SEAMS.

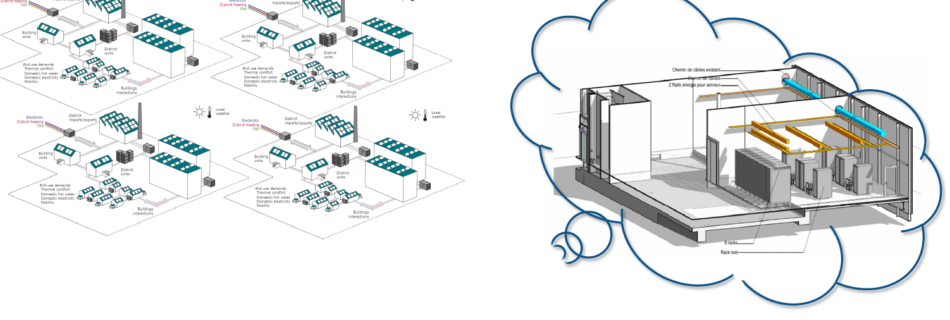

Our new experimental facility is up and running

As promised in previous announcements, the new EcoCloud Experimental Space is running, and experiments are underway. The facility is located in the CCT Building, alongside the EPFL Data Center and above the campus central heating plant. There are currently eight racks with passive rear door liquid/air exchangers. A large experimental […]

David Atienza appointed ACM CSUR Editor-in-Chief

David Atienza, head of the Embedded Systems Lab in the School of Engineering, has been named Editor-in-Chief of the journal Computing Surveys (CSUR) of the Association for Computing Machinery (ACM). CSUR is a peer-reviewed journal that publishes surveys of and tutorials on areas of computing research or practice. With an […]

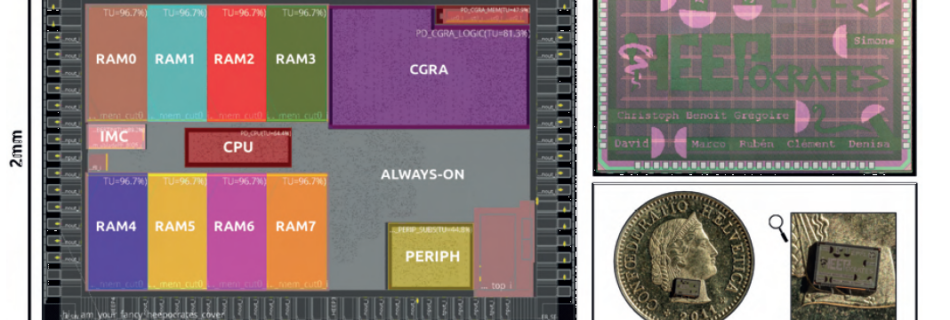

HEEPocrates featured in EUROPRACTICE report

An article on the HEEPocrates chip has been published in the EUROPRACTICE Activity Report 2023. EUROPRACTICE was launched by the European Commission in 1995 as a successor of EUROCHIP (1989-1995) to enhance European industrial competitiveness in the global market. A detailed breakdown of the chip and its component processes and […]

EPFL Engineering Industry Day

EcoCloud were happy to participate in the School of Engineering’s Industry Day 2024. Drazen Dujic, Elison Matioli, Francois Maréchal, and Mario Paolone all presented. David Atienza and Volkan Cevher also made video contributions. Photo captions: Prof. Volkan Cevher and Xavier Ouvrard of EcoCloud; Prof. Volkan Cevher of EcoCloud; Xavier Ouvrard at the EcoCloud […]
