Having just come through a heatwave, it is easy to forget that humans are not the only ones who need shelter from oppressive heat. Animals suffer too, as does large-scale infrastructure such as railways and even major runways. But what about computers?

From the beginning, data centers have faced cooling problems. In the early days, Google was known for a remarkable set-up in its data centers, where servers were laid out in open rows in a refrigerated environment. Technicians in California could walk in to replace velcro-mounted components while enjoying a few refreshing minutes in the cool air. The real benefit was that keeping the rooms at a low temperature lightened the load on each server’s individual cooling system. Nowadays, however, this would be regarded as extraordinarily inefficient: why waste energy refrigerating an entire room when only the PCs themselves need to be kept cool?

Prof. Elison Matioli from POWERlab has taken this question a step further. Why should the entire PC be cooled down by having air blown at it, when each individual chip could have its own liquid-cooled system?

As data center demand goes up, so does the cost of cooling

Unless you are unusually well-versed in data center technology, you are probably unaware of how much you rely on data centers. If you use Gmail or Outlook.com, every email you send or receive is likely stored in a data center, quite possibly in the USA; if your photos are backed up on Apple iCloud or Google Photos, they are stored in several of the many data centers these companies run around the world. As more people adopt the Internet of Things, from smart cars to smart doorbells to fitness monitors, our use of data centers keeps growing.

At EPFL’s EcoCloud Center, we have many professors working on ways to decrease the energy consumption of data centers, even as demand grows and global temperatures rise. At the smallest scale, Prof. Moser and Prof. Psaltis have been researching light propagation in optical fibers and using it to perform practical computational tasks with much lower energy consumption than traditional digital techniques. At the city-wide scale, Prof. Paolone has been building smart grids that turn static power networks into self-regulating, highly efficient intelligent systems.

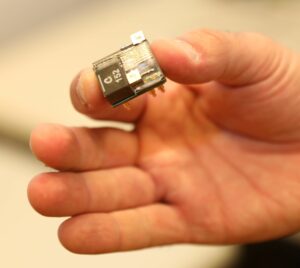

Prof. Elison Matioli is working between these two extremes, at the level of computer components. “Our vision is to contribute to the development of an integrated chip (a single unit for power electronics where you have loads of devices integrated), smaller and more energy-efficient than anything that can be achieved currently.”

As microchips continue to shrink and silicon, that tried and tested material, approaches its natural limits, scientists around the globe are seeking alternatives. Prof. Matioli has identified what he sees as the best alternative, but it brings an inevitable problem:

"Using gallium nitride (GaN) allows us to build electronic devices like power units, memory chips or graphics cards much smaller than can be achieved with silicon. We can deliver better energy-conversion performance in a much smaller volume but, as a consequence, we generate greater amounts of heat over a smaller surface area.

"It is vital that components do not overheat, nor cause neighbouring devices to overheat."

Cooling such revolutionary chipsets became a main focus of Prof. Matioli’s team and led to some radical solutions. These solutions, in turn, opened up new possibilities for cooling all kinds of chipsets, including data center infrastructure.

Walking through the lab feels like stepping into science fiction.

Just as an astronaut wears a space suit with built-in liquid cooling, each of these microchips is housed in its own liquid-cooled membrane. Traditional air cooling works, but liquids such as water conduct and carry away heat far more effectively than air, so the cooling is much more efficient. In the devices being pioneered by POWERlab, microchannels of varying diameter provide cooling tailored to the needs of each chip, as part of a cooling network designed for the entire machine, with the hot spots identified in advance. Crucially, only the hot spots themselves are targeted: an ultra-efficient strategy.
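To make the idea of targeting only the hot spots more concrete, here is a toy sketch in Python. It is not POWERlab’s or Corintis’s actual design method. It simply assumes that a chip’s heat map is available as a grid of per-cell power values (in watts), flags the cells above an arbitrary threshold as hot spots, and splits a fixed coolant flow budget among them in proportion to their power. The grid values, threshold, flow budget and the function names `find_hot_spots` and `allocate_flow` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class HotSpot:
    row: int
    col: int
    power_w: float


def find_hot_spots(power_map, threshold_w):
    """Return every grid cell whose dissipated power exceeds threshold_w."""
    return [
        HotSpot(r, c, p)
        for r, row in enumerate(power_map)
        for c, p in enumerate(row)
        if p > threshold_w
    ]


def allocate_flow(hot_spots, total_flow_ml_min):
    """Split a fixed coolant budget among hot spots in proportion to their power."""
    total_power = sum(h.power_w for h in hot_spots)
    if total_power == 0:
        return {}  # nothing above threshold: no targeted flow is assigned
    return {
        (h.row, h.col): total_flow_ml_min * h.power_w / total_power
        for h in hot_spots
    }


# Hypothetical 3x3 heat map of one chip, in watts per cell.
power_map = [
    [0.5, 0.6, 0.5],
    [0.4, 6.0, 0.7],
    [0.5, 0.6, 2.0],
]

hot_spots = find_hot_spots(power_map, threshold_w=1.0)
flows = allocate_flow(hot_spots, total_flow_ml_min=40.0)
print(flows)  # {(1, 1): 30.0, (2, 2): 10.0}
```

In this example the 6 W and 2 W cells receive 30 and 10 mL/min respectively, while the cooler cells get no dedicated flow. A real co-design would also have to account for heat spreading between cells, pressure drop along the microchannels and the coolant warming as it flows, none of which this sketch models.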

Getting mechanical and electronics engineers to work together

This "co-designing" of the ultra-compact microchips and their cooling system is what makes the approach unique, and it lies at the heart of Corintis SA, a start-up spun off from POWERlab that is currently recruiting.

"Corintis offers a service to chip manufacturers, providing them with heat maps for their devices. Its experts can optimise microfluidic cooling while the customer is still designing their microchips, and then design a heat sink made to measure for that chipset."

Interdisciplinarity is a key feature of this work: “Very often the departments looking at thermal issues and at electronic devices, mechanical engineering and electrical engineering, are in different buildings. So you build a chip and then send it to another department to find a way to cool it down. But by then you have already missed many opportunities!

"In our lab I brought mechanical engineers and electrical engineers to work together," explains Prof. Matioli, "and that is what makes us different."

In discussion with Remco van Erp, CEO of Corintis SA

The annual increase in computing power of general-purpose chips has been slowing down in recent years. Many of the biggest tech companies in the world now design their own application-specific chips to meet their future needs: Apple designs chips for its phones and laptops, Amazon designs chips for its data centers, and YouTube even designs chips for video processing. The result is a great deal of heterogeneity. Custom chipsets can benefit greatly from tailor-made cooling solutions that improve energy efficiency and performance, especially in data centers. Increasingly, companies are coming to us looking for better cooling solutions.

This is a highly multidisciplinary problem, requiring expertise ranging all the way from mathematics to plumbing. At Corintis, computational and hardware experts work together to achieve our goals. Modelling is very important, since we want to predict power and temperature distributions, and optimize the microfluidic cooling design, before a chip is even manufactured. It is also a multi-scale problem: on the one hand, we are dealing with channels at the micrometre scale; on the other, they are integrated into chips that are several centimetres across. This requires clever innovations in modelling and simulation.

We keep strong links with EPFL: our microfabrication experts work in its clean rooms, we have four interns from EPFL and other international institutions, and we are applying for research funding in collaboration with POWERlab.

Find out more:

POWERlab

https://powerlab.epfl.ch

Corintis

https://corintis.com

EcoCloud

https://ecocloud.epfl.ch

Related publications:

Multichannel nanowire devices for efficient power conversion

Nature, 25 March 2021

Co-designing electronics with microfluidics for more sustainable cooling

Nature, 9 September 2020